Be Evil

“Don’t be evil” hasn’t been an official part of Google’s corporate code of conduct since 2018. The motto had been around since 2000, even serving as the wifi password on the shuttles that the company uses to ferry its employees to its Mountain View headquarters. In 2018 it was removed, replaced by the less direct stricture “do the right thing.” Android Central reports that Google has now made a change to its AI principles statement that is reminiscent of the removal of “don’t be evil.”

The company has revised its AI responsibility pledge and no longer promises not to develop AI for use in dangerous tech. Prior versions of Google’s AI Principles promised the company wouldn’t develop AI for “weapons or other technologies whose principal purpose or implementation is to cause or directly facilitate injury to people” or “technologies that gather or use information for surveillance violating internationally accepted norms.” Those promises are now gone.

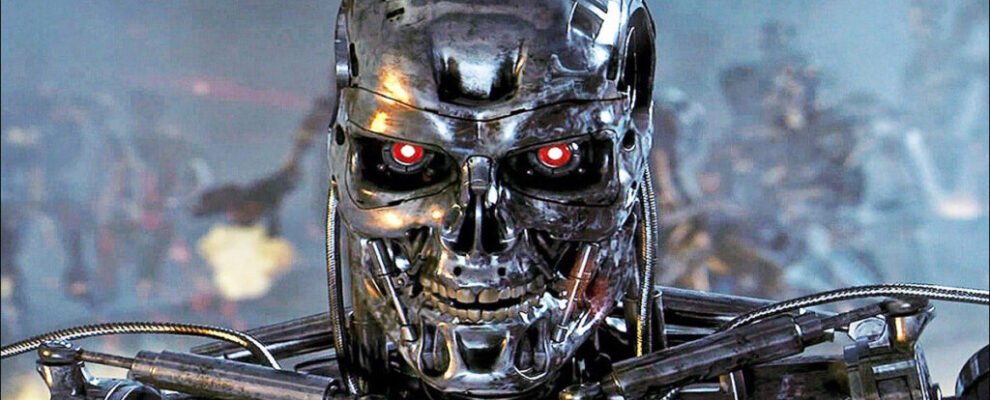

If you’re not great at deciphering technobabble public relations pseudo-languages, that means Google no longer rules out making AI for weapons and spy “stuff.” It suggests that Google is willing to develop or aid in the development of software that could be used for war. Instead of Gemini just drawing pictures of AI-powered death robots, it could essentially be used to help build them.