Be Evil

“Don’t be evil” hasn’t been an official part of Google’s corporate code of conduct since 2018. The motto had been around since 2000, even serving as the wifi password on the shuttles that the company uses to ferry its employees to its Mountain View headquarters. In 2018 it was removed, replaced by the less direct stricture, “do the right thing.” Android Central reports that Google has now made a change to its AI principles statement that is reminiscent of the removal of “don’t be evil.”

The company has revised its AI responsibility pledge and no longer promises not to develop AI for use in dangerous tech. Prior versions of Google’s AI Principles promised the company wouldn’t develop AI for “weapons or other technologies whose principal purpose or implementation is to cause or directly facilitate injury to people” or “technologies that gather or use information for surveillance violating internationally accepted norms.” Those promises are now gone.

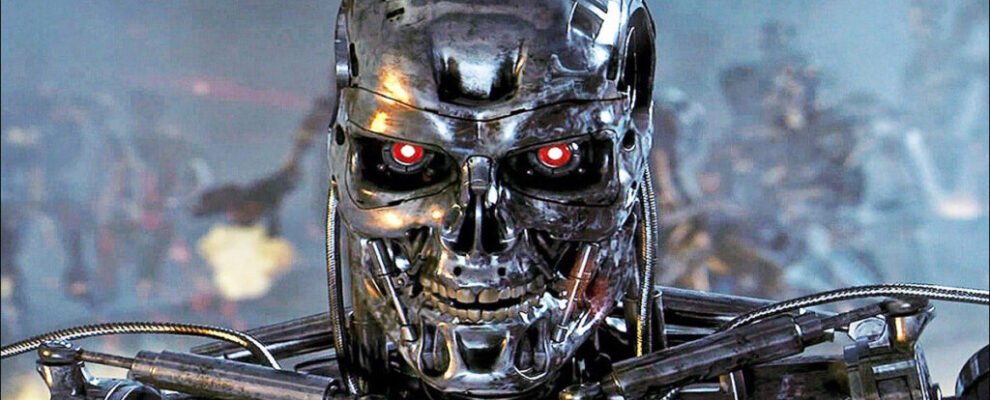

If you’re not great at deciphering technobabble public relations pseudo-languages, that means making AI for weapons and spy “stuff.” It suggests that Google is willing to develop or aid in the development of software that could be used for war. Instead of Gemini just drawing pictures of AI-powered death robots, it could essentially be used to help build them.

The change is significant, reflecting the company’s…evolution on the whole evil thing.

In 2018, the company declined to renew its “Project Maven” contract with the government, which involved analyzing drone surveillance, and did not bid on a cloud contract for the Pentagon because it wasn’t sure these could align with the company’s AI principles and ethics.

Then in 2022, it was discovered that Google’s participation in “Project Nimbus” gave some executives at the company concerns that “Google Cloud services could be used for, or linked to, the facilitation of human rights violations.” Google’s response was to force employees to stop discussing political conflicts like the one in Palestine.

In practice, the formerly stated principles weren’t exactly carved in stone, Wired suggests (paywall).

After the initial release of its AI principles roughly seven years ago, Google created two teams tasked with reviewing whether projects across the company were living up to the commitments. One focused on Google’s core operations, such as search, ads, Assistant, and Maps. Another focused on Google Cloud offerings and deals with customers. The unit focused on Google’s consumer business was split up early last year as the company raced to develop chatbots and other generative AI tools to compete with OpenAI.

Timnit Gebru, a former colead of Google’s ethical AI research team who was later fired from that position, claims the company’s commitment to the principles had always been in question. “I would say that it’s better to not pretend that you have any of these principles than write them out and do the opposite,” she says.

If not Google, then someone else, certainly, and it’s in the world’s best interest that democracies keep up in the worldwide race to develop practical Artificial Intelligence. Given that, I suppose this whole thing is just sentimentality. You just hate to see a company you use and rely on walk back a pledge not to do harm, you know?